Modern applications are no longer built to run on a single server or even a single cloud provider. They are designed to be dynamic, scalable, and resilient – capable of running across multiple Kubernetes clusters with minimal downtime. But with this flexibility comes complexity. How do organizations efficiently manage, deploy, and scale applications across different infrastructures while ensuring high availability and performance?

This is where the Kubernetes ecosystem comes in. Originally developed by Google and now maintained by the Cloud Native Computing Foundation (CNCF), Kubernetes has become the industry standard for container orchestration. It automates the deployment, management, and scaling of containerized workloads, removing much of the manual work that traditional infrastructure management requires.

For businesses running modern applications, Kubernetes is a strategic advantage. It allows organizations to optimize cloud costs, balance workloads, and ensure seamless updates without disrupting user experiences. Whether you’re a startup looking to scale quickly or an enterprise managing complex multi-cluster environments, Kubernetes offers the control and efficiency needed to stay competitive.

But what exactly is Kubernetes, how does it work, and why has it become essential? Let’s explore.

Kubernetes Explained

Kubernetes (often abbreviated as K8s) is an open-source platform designed to automate the deployment, scaling, and management of containerized applications. Initially developed by Google in 2014 and later donated to the Cloud Native Computing Foundation (CNCF), Kubernetes has become the backbone of cloud-native technologies.

Kubernetes enables developers to manage application containers deployed across clusters of physical or virtual machines, ensuring efficiency and high availability. It addresses the challenges of running and connecting containers across multiple hosts, including high availability and service discovery. Kubernetes is widely used by enterprises due to its ability to handle distributed workloads, streamline multi-cluster management, and integrate with public cloud providers.

How Kubernetes Works

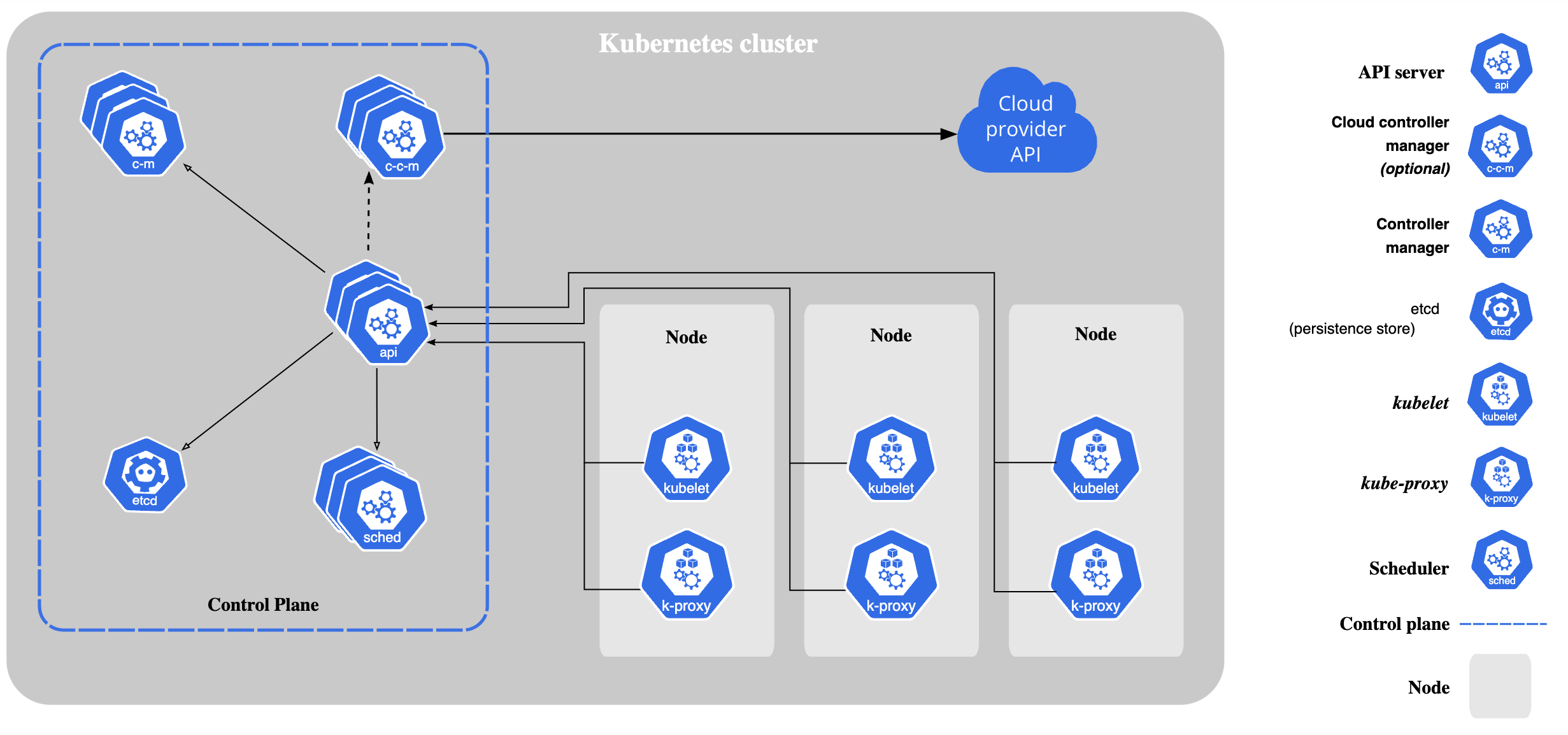

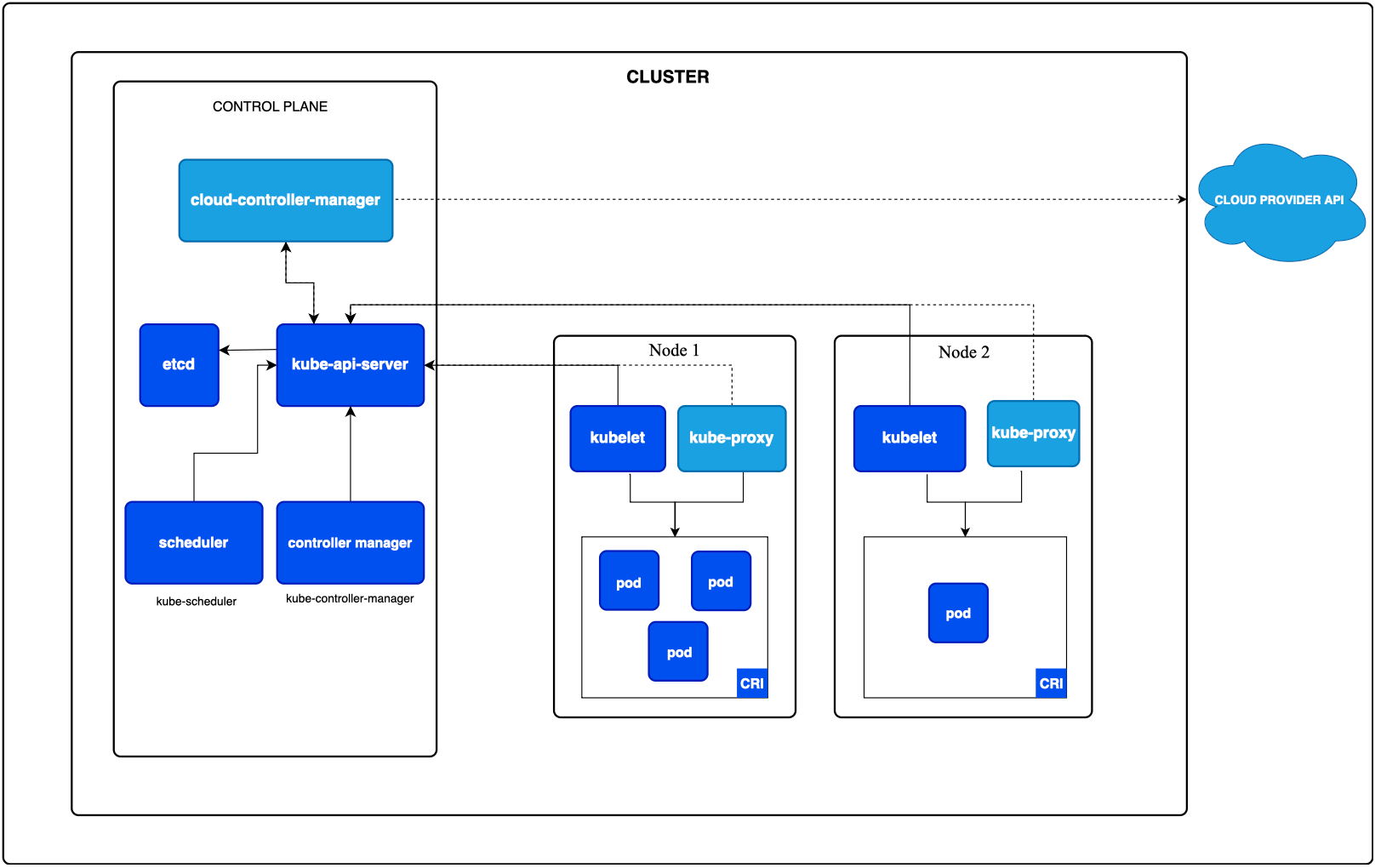

Kubernetes automates the deployment, scaling, and management of containerized applications by orchestrating workloads across a Kubernetes cluster. It consists of a control plane that maintains the system’s desired state and worker nodes that execute application workloads. When a user submits a Kubernetes deployment request, the API server validates it and records the desired state in etcd. The Kubernetes scheduler then assigns the resulting pods to the most suitable nodes based on resource requirements.

Networking in Kubernetes enables service discovery and communication between containers deployed across different nodes. Each pod is assigned its own IP address, while kube-proxy maintains network rules on each node that route Service traffic to the right pods. Applications needing persistent storage rely on the underlying storage infrastructure: Kubernetes exposes storage through persistent volumes (PVs) and persistent volume claims (PVCs), so data survives container and pod restarts.
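
As a rough illustration of how a claim is matched to a volume, the sketch below models a simplified binding rule: a claim binds to a free volume that offers at least the requested capacity and the requested access mode. The class and function names are hypothetical, and choosing the smallest fitting volume is an assumption of this sketch. The real control plane also considers storage classes, selectors, and dynamic provisioning.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PersistentVolume:  # hypothetical model, not the Kubernetes API type
    name: str
    capacity_gib: int
    access_modes: List[str]
    bound_to: Optional[str] = None  # name of the claim using this volume


@dataclass
class PersistentVolumeClaim:  # hypothetical model
    name: str
    request_gib: int
    access_mode: str


def bind_claim(claim: PersistentVolumeClaim,
               volumes: List[PersistentVolume]) -> Optional[PersistentVolume]:
    """Bind the claim to the smallest free volume that satisfies it."""
    candidates = [
        pv for pv in volumes
        if pv.bound_to is None
        and pv.capacity_gib >= claim.request_gib
        and claim.access_mode in pv.access_modes
    ]
    if not candidates:
        return None  # no match: the claim would stay pending
    best = min(candidates, key=lambda pv: pv.capacity_gib)
    best.bound_to = claim.name
    return best


volumes = [
    PersistentVolume("pv-large", 100, ["ReadWriteOnce"]),
    PersistentVolume("pv-small", 20, ["ReadWriteOnce"]),
]
bound = bind_claim(PersistentVolumeClaim("app-data", 10, "ReadWriteOnce"), volumes)
print(bound.name if bound else "pending")
```

Once bound, the volume stays reserved for that claim, which is why the data outlives any individual pod that mounts it.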

For high availability, workloads can be distributed across multiple Kubernetes clusters for fault tolerance and scalability, typically with the help of additional multi-cluster tooling. Organizations use certified Kubernetes distributions, which pass the CNCF conformance tests, to keep workloads portable across public cloud providers. By abstracting the manual processes involved in traditional infrastructure management, Kubernetes streamlines managing containerized workloads and optimizes cloud-native application deployment.

Kubernetes Terms Explained: Clusters, Pods, Nodes & More

Kubernetes is built on key design principles that enable it to run applications reliably and at scale. It provides an abstraction layer over infrastructure, allowing development and operations teams to manage containerized applications efficiently. To understand how Kubernetes works, let’s break down its key components:

Kubernetes Cluster

A Kubernetes cluster consists of a control plane and a set of worker nodes. The control plane is responsible for managing cluster operations, while the worker nodes run application workloads.

Control Plane

The Kubernetes control plane manages the entire Kubernetes cluster and ensures the desired state of deployed applications is maintained. It consists of several components:

• API Server (kube-apiserver): The entry point for all Kubernetes commands. It exposes the Kubernetes API and acts as the communication hub between internal components.

• Controller Manager (kube-controller-manager): Ensures that the cluster’s state matches the desired state defined by users, handling tasks such as node monitoring, job control, and endpoint management.

• Scheduler (kube-scheduler): Assigns workloads to available worker nodes based on resource requirements, availability, node health, and policies.

• etcd: A distributed key-value store that holds all cluster data, including configuration data, secrets, and metadata.
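
To make the scheduler's role more concrete, here is a minimal sketch of a filter-then-score placement decision. It assumes only CPU and memory requests matter and uses a made-up scoring rule (most free CPU left after placement). The names `Node`, `PodRequest`, and `schedule` are hypothetical, and the real kube-scheduler applies many more filters and scoring plugins.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:  # hypothetical model of a worker node
    name: str
    free_cpu_millicores: int
    free_memory_mib: int
    healthy: bool = True


@dataclass
class PodRequest:  # hypothetical model of a pod's resource requests
    name: str
    cpu_millicores: int
    memory_mib: int


def schedule(pod: PodRequest, nodes: List[Node]) -> Optional[str]:
    """Pick a node for the pod, or return None if no node fits."""
    # Filter: keep healthy nodes with enough free CPU and memory.
    feasible = [
        n for n in nodes
        if n.healthy
        and n.free_cpu_millicores >= pod.cpu_millicores
        and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None  # the pod would remain unscheduled
    # Score: prefer the node with the most CPU left after placement
    # (an assumption of this sketch, not the real scoring policy).
    best = max(feasible, key=lambda n: n.free_cpu_millicores - pod.cpu_millicores)
    best.free_cpu_millicores -= pod.cpu_millicores
    best.free_memory_mib -= pod.memory_mib
    return best.name


nodes = [
    Node("node-a", 500, 1024),
    Node("node-b", 2000, 4096),
    Node("node-c", 4000, 8192, healthy=False),
]
placement = schedule(PodRequest("web", 1000, 512), nodes)
print(placement)
```

In this example node-a lacks CPU and node-c is unhealthy, so only node-b passes the filter step.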

Kubernetes Nodes

Nodes are the individual machines in a Kubernetes cluster, running workloads and hosting the necessary compute resources. A node contains:

• Kubelet: The primary agent running on each node that communicates with the control plane and ensures the assigned containers are running.

• Container Runtime: The software responsible for running containers from container images (e.g., containerd or CRI-O; Docker Engine is supported through the cri-dockerd adapter).

• kube-proxy: Maintains network rules on each node so that traffic addressed to a Service reaches the right pods, enabling communication between services across nodes.

Kubectl

Kubectl is the command-line tool that interacts with the Kubernetes API to manage deployments, configurations, and logs.

Kubernetes Secret

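A Kubernetes Secret stores sensitive information such as passwords, API keys, and certificates separately from application code and pod definitions. By default, Secret values are only base64-encoded and stored unencrypted in etcd, so encryption at rest and restrictive RBAC rules should be enabled to protect them.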
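
The sketch below builds a Secret manifest as a plain Python dictionary to show how values are represented. Values in a manifest's `data` field are base64-encoded, which is an encoding rather than encryption. The helper name `build_secret_manifest`, the Secret name, and the sample value are placeholders chosen for illustration.

```python
import base64
import json
from typing import Dict


def build_secret_manifest(name: str, values: Dict[str, str]) -> dict:
    """Return a Secret manifest whose 'data' values are base64-encoded."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()
        },
    }


# Placeholder name and value, for illustration only.
manifest = build_secret_manifest("db-credentials", {"password": "s3cr3t"})
print(json.dumps(manifest, indent=2))

# base64 is trivially reversible, so it offers no confidentiality by itself.
decoded = base64.b64decode(manifest["data"]["password"]).decode("utf-8")
print(decoded)
```

Because anyone who can read the manifest can decode it, access to Secrets is usually restricted and the manifests are kept out of source control.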

Kubernetes Service

A Kubernetes service enables internal and external requests to reach deployed containers, ensuring seamless communication within and outside the cluster.
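
A Service finds its backing pods through a label selector. The sketch below mimics that matching step and then rotates requests across the matching pods. The pod records, IP addresses, and the round-robin rotation are assumptions made for illustration; the actual balancing behavior depends on how kube-proxy is configured.

```python
from itertools import cycle
from typing import Dict, List


def select_endpoints(selector: Dict[str, str], pods: List[dict]) -> List[str]:
    """Return IPs of ready pods whose labels contain every selector pair."""
    return [
        pod["ip"]
        for pod in pods
        if pod["ready"]
        and all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


# Hypothetical pods; in a real cluster this data comes from the API server.
pods = [
    {"ip": "10.0.0.1", "labels": {"app": "web"}, "ready": True},
    {"ip": "10.0.0.2", "labels": {"app": "web"}, "ready": False},
    {"ip": "10.0.0.3", "labels": {"app": "api"}, "ready": True},
    {"ip": "10.0.0.4", "labels": {"app": "web", "tier": "frontend"}, "ready": True},
]

endpoints = select_endpoints({"app": "web"}, pods)

# Simplified round-robin over the selected endpoints.
backends = cycle(endpoints)
routed = [next(backends) for _ in range(3)]
print(endpoints, routed)
```

Pods that are not ready or whose labels do not match are skipped, so clients keep using one stable Service address while the set of pods behind it changes.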

Pods and Containers

• Pods are the smallest deployable unit in Kubernetes, consisting of one or more containers that share storage, networking, and configuration.

• Containers within a pod run the actual application processes.

Kubernetes Operator

A Kubernetes Operator is a method of packaging, deploying, and managing Kubernetes applications, extending the platform’s capabilities by automating complex operational tasks using custom controllers.

Kubernetes Deployment

A Kubernetes deployment allows you to describe your desired application deployment state. Kubernetes controllers, which run in the controller manager, ensure the actual state matches your desired state and maintain that state if one or more pods crash.
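
As an illustration of what a desired state looks like, the sketch below assembles a minimal Deployment manifest as a Python dictionary and prints it as JSON. The helper name `build_deployment`, the application name, and the image reference are hypothetical; the field layout follows the `apps/v1` Deployment schema.

```python
import json


def build_deployment(name: str, image: str, replicas: int) -> dict:
    """Return a minimal apps/v1 Deployment manifest as a dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # desired number of pods
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical application name and image reference.
deployment = build_deployment("web", "registry.example.com/web:1.0", 3)
print(json.dumps(deployment, indent=2))
```

kubectl accepts JSON as well as YAML, so output like this could be saved to a file and applied with `kubectl apply -f`.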

Three Core Design Principles:

- Declarative Configuration: Kubernetes users define the desired state of an application, and the control plane ensures that the system reaches that state automatically.

- Self-Healing Capabilities: Kubernetes can detect and replace failed instances, ensuring high availability.

- Automated Scaling: Kubernetes dynamically adjusts resources based on demand, optimizing infrastructure usage and tracking resource allocation.
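
The first two principles can be pictured as a control loop that repeatedly compares the desired state with the actual state and corrects any difference. The following is a minimal sketch of one pass of such a loop, using plain dictionaries as stand-ins for pods. The function name `reconcile` and the pod naming scheme are hypothetical.

```python
from typing import List, Tuple


def reconcile(desired_replicas: int, running_pods: List[dict],
              next_id: int) -> Tuple[List[dict], int]:
    """One pass of a simplified control loop for a replicated workload."""
    # Self-healing: discard pods that have failed.
    pods = [pod for pod in running_pods if pod["healthy"]]
    # Too few pods: create replacements until the desired count is met.
    while len(pods) < desired_replicas:
        pods.append({"name": f"web-{next_id}", "healthy": True})
        next_id += 1
    # Too many pods: remove the surplus.
    del pods[desired_replicas:]
    return pods, next_id


current = [
    {"name": "web-0", "healthy": True},
    {"name": "web-1", "healthy": False},  # simulated crash
    {"name": "web-2", "healthy": True},
]
current, next_id = reconcile(3, current, next_id=3)
print([pod["name"] for pod in current])
```

The user only declares the target of three replicas; the loop works out that one replacement pod is needed.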

Benefits of Using Kubernetes

Kubernetes offers numerous advantages for scaling containerized applications efficiently in production environments. Here are some of the key ones:

Automated Deployment & Scaling: Kubernetes streamlines application deployment and scaling by integrating with continuous integration and continuous deployment (CI/CD) pipelines. Developers can define desired state configurations, and Kubernetes ensures that applications are deployed consistently across different environments. Additionally, Kubernetes enables horizontal and vertical scaling based on workload demands, dynamically adjusting the number of containers deployed to meet traffic spikes or reduce resource consumption during low-demand periods.
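
For horizontal scaling, the Horizontal Pod Autoscaler documentation describes the core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies that formula and clamps the result to a replica range. The function name and the default bounds are assumptions, and the real autoscaler adds refinements such as a tolerance band and stabilization windows that are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Apply the documented HPA ratio and clamp to the allowed range."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Average CPU at 90% against a 60% target with 4 replicas.
scale_up = desired_replicas(4, 90, 60)
# Average CPU at 30% against a 60% target with 4 replicas.
scale_down = desired_replicas(4, 30, 60)
print(scale_up, scale_down)
```

When load is above the target the replica count grows proportionally, and when load drops it shrinks again, always within the configured minimum and maximum.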

Resource Efficiency: Managing containerized workloads requires optimizing compute power, storage resources, and networking capacity. Kubernetes schedules and assigns workloads based on resource requirements, ensuring that no single node is over-utilized while others remain idle. By dynamically adjusting storage resources and scaling based on demand, Kubernetes improves efficiency while minimizing unnecessary infrastructure costs.

Load Balancing & Traffic Management: As businesses deploy multiple applications across clusters, efficient load balancing becomes essential to maintain performance. Kubernetes automatically distributes workloads across nodes and uses built-in service discovery mechanisms to ensure that requests reach the correct service instances. Ingress controllers and network proxies help route internal and external requests, optimizing traffic flow and preventing bottlenecks in high-demand scenarios.

Multi-Cloud & Hybrid Cloud Support: Many organizations combine on-site data centers with public or private cloud solutions, and balance workloads between multiple cloud providers to take advantage of changes in pricing and service levels. Kubernetes works across multiple cloud providers and bare metal servers, offering deployment flexibility.

Management of Complex Environments: Microservices in containers make it easier to orchestrate services, including storage, networking, and security, but they also significantly multiply the number of containers in your environment, increasing complexity. Kubernetes groups containers into pods, helping you schedule workloads and provide necessary services, like networking and storage, to those containers.

Self-Healing & Fault Tolerance: Kubernetes ensures high availability by constantly monitoring the health of application containers. If a container fails, Kubernetes automatically restarts it, and if a node fails, the affected pods are recreated on a different node to prevent downtime. Additionally, it uses ReplicaSets to maintain the desired number of active instances, so that services remain available even if a node crashes or a failure occurs in the underlying storage infrastructure.

Security & Compliance: Enterprises require robust security measures when managing cloud environments. Kubernetes provides role-based access control (RBAC) and secrets management to safeguard sensitive data like API keys and credentials, and certified Kubernetes distributions offer a consistent, conformant base to build on. Kubernetes can also be integrated with compliance tooling, helping organizations meet regulatory requirements for data storage and application security. By isolating workloads and enforcing strict access policies, Kubernetes enhances security across multi-cloud and hybrid environments.

Most Common Kubernetes Use-Cases

Kubernetes has become a foundational technology across industries, enabling businesses to scale, automate, and manage applications more efficiently. From modernizing legacy applications to streamlining DevOps and AI workloads, Kubernetes offers solutions tailored to various enterprise needs. Below are some of the most impactful use cases, based on industry insights and real-world implementations.

1. Application Modernization

Many enterprises still rely on legacy applications running on traditional infrastructure. These monolithic applications can be challenging to maintain, scale, and integrate with modern cloud-native environments. Kubernetes provides a seamless way to containerize and orchestrate these applications, allowing businesses to modernize without completely rewriting their codebase.

By breaking monolithic applications into containerized microservices, organizations can enhance scalability, improve resilience, and reduce operational overhead. Kubernetes ensures that these modernized applications can run efficiently across hybrid and multi-cloud environments, making it easier to integrate with newer technologies like AI, IoT, and big data processing.

2. Edge Computing and IoT

As businesses generate vast amounts of data at the edge, from IoT devices, remote sensors, and autonomous systems, processing this data centrally in a cloud environment can introduce latency and inefficiencies. Kubernetes extends container orchestration to the edge, enabling real-time data processing closer to the source.

With Kubernetes, enterprises can deploy workloads to edge locations dynamically, ensuring that IoT applications operate with low latency and high availability. For example, in the manufacturing sector, Kubernetes manages edge-based predictive maintenance systems that analyze equipment data in real time, reducing downtime and improving operational efficiency.

3. DevOps and Continuous Delivery

Kubernetes is a cornerstone of DevOps, streamlining continuous integration and continuous delivery (CI/CD) pipelines. It enables developers to automate application deployments, updates, and rollbacks with minimal human intervention. By using Kubernetes in a DevOps workflow, teams can achieve faster release cycles, reduce deployment errors, and ensure application consistency across development, testing, and production environments.

Kubernetes supports rolling updates natively, and blue-green deployments and canary releases can be built on top of its Deployments and Services, allowing businesses to introduce new features with little or no downtime. This use case is particularly valuable for e-commerce, SaaS platforms, and other industries where frequent updates and high availability are critical.
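
To show why a rolling update can avoid downtime, here is a simplified simulation of how old pods are replaced step by step. It borrows the `maxSurge` and `maxUnavailable` settings from the Deployment rolling update strategy, but the function itself is a hypothetical sketch that assumes every new pod becomes ready immediately.

```python
from typing import List, Tuple


def rolling_update(replicas: int, max_surge: int = 1,
                   max_unavailable: int = 0) -> List[Tuple[int, int]]:
    """Return (old_pods, new_pods) counts after each step of the rollout."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up: never exceed replicas + max_surge pods in total.
        room = replicas + max_surge - (old + new)
        new += min(room, replicas - new)
        # Scale down: keep at least replicas - max_unavailable pods running.
        removable = (old + new) - (replicas - max_unavailable)
        old -= min(old, max(removable, 0))
        steps.append((old, new))
    return steps


steps = rolling_update(replicas=4, max_surge=1, max_unavailable=0)
for old_pods, new_pods in steps:
    print(f"old={old_pods} new={new_pods}")
```

With these settings the total never drops below four running pods, so capacity is preserved while versions change one pod at a time.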

4. AI and Machine Learning Model Deployment

Deploying AI and machine learning models in production requires efficient management of computing resources, especially GPUs and specialized hardware. Kubernetes enables organizations to orchestrate and scale AI workloads dynamically, ensuring optimal performance while reducing infrastructure costs.

With Kubernetes, businesses can automate model training, inference, and retraining workflows. This is crucial for AI-driven industries such as healthcare (medical image analysis), finance (fraud detection), and retail (personalized recommendations). Kubernetes ensures that machine learning models remain scalable, portable, and easy to deploy across cloud and on-premises environments.

5. Disaster Recovery and High Availability

Ensuring business continuity in the face of infrastructure failures, cyber threats, or natural disasters is a top priority for enterprises. Kubernetes provides built-in self-healing capabilities and automated failover mechanisms that help organizations maintain application availability.

With Kubernetes, workloads can be replicated across multiple cloud regions or data centers, ensuring redundancy and fault tolerance. In the event of a failure, Kubernetes automatically reschedules affected workloads to healthy nodes, minimizing downtime. This makes Kubernetes an ideal solution for financial services, telecommunications, and mission-critical applications where uptime is essential.

Final Thoughts

Kubernetes has transformed cloud-native development by enabling businesses to automate and scale containerized applications efficiently. Whether managing microservices, AI workloads, or multi-cloud deployments, Kubernetes offers flexibility and reliability for modern IT environments. As organizations increasingly adopt new technologies, Kubernetes remains the go-to platform for container orchestration.

For companies looking to optimize their cloud operations and streamline how they provision data storage resources, Kubernetes provides the necessary tools to achieve automation, scalability, and security without unnecessary complexity. If you’re considering Kubernetes for your organization, now is the time to explore its full potential.

FAQs

What is Kubernetes?

Kubernetes is an open-source platform for container orchestration that automates the deployment, scaling, and management of containerized applications. It simplifies infrastructure management and enhances reliability.

What is Kubernetes used for?

Kubernetes is used to automate the deployment, scaling, and management of containerized applications across cloud and on-premises environments. It enables businesses to orchestrate workloads, optimize resource allocation, and ensure high availability for applications in production environments.

How do Docker and Kubernetes work together?

Docker builds container images and runs containers on a single host, while Kubernetes orchestrates and manages these containers at scale across a cluster.

What is Kubernetes vs Docker?

Docker is a tool for building and running individual containers, whereas Kubernetes is responsible for orchestrating and managing them across clusters.

What would I use Kubernetes for?

Kubernetes is used for container orchestration, microservices management, CI/CD automation, and application deployment.

What is Kubernetes for dummies?

Kubernetes is a system that automates the deployment and scaling of software applications using containers.

What is a Kubernetes example?

A common Kubernetes use case is deploying a web application with microservices across cloud environments.

Why does everyone use Kubernetes?

Kubernetes provides automation, scalability, security, and cost efficiency, making it ideal for modern applications.

Do we really need Kubernetes?

For small applications, Kubernetes may be overkill. However, for large-scale distributed applications, it significantly improves performance, automation, and reliability.