“Inspired by our CloudNative Vilnius presentation by our great engineering Master Yoda Andrius.”

Who needs security?

Kafka security is a relatively new topic in software engineering, yet it is essential when you need reliable and secure isolation between microservices in a multi-tenant setup. Have you ever had to host all your tenants or clients on the same infrastructure? In databases, you can have a very dynamic role or permissions scheme! But what can we do when the data hits the “fan” (I mean Kafka)!? Let’s start from the beginning.

According to Wikipedia, Kafka was a German-speaking Bohemian-Jewish novelist. In the spirit of his work, which fuses elements of realism and the fantastic, we will try to fuse agile GitOps and security in the solution below.

Apache Kafka is a distributed event store and stream-processing platform. It is an open-source system developed by the Apache Software Foundation and written in Java and Scala. The project aims to provide a unified, high-throughput, low-latency platform for handling real-time data feeds, which in our experience makes it a very strong choice for inter-service communication.

How we met…

Our team first met the Kafka security stack in various banking and fintech solutions. It was a pain… Imagine you have 300 tenants multiplied by 35 microservices, and you need to make sure that tenant “A” does not have access to tenant “B”, and that microservice “A” cannot read messages on the events group of microservice “B”. The whole idea makes you shiver! The basic rule from the AWS documentation looks like this:

    /bin/kafka-acls.sh --authorizer-properties zookeeper.connect=ZooKeeper-Connection-String --add --allow-principal "User:CN=Distinguished-Name" --operation Read --group=* --topic Topic-Name

You need to produce that for each topic, or topic rule, combined with a group and different users, and the Confluent Kafka part definitely differs.
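To get a feel for how quickly this grows, here is a minimal sketch that generates one such command per tenant and microservice. The flags are the ones from the command above; the naming scheme (a `<tenant>.<service>.events` topic and a `<tenant>-<service>` certificate CN) and the helper name `acl_commands` are assumptions made purely for illustration.

```python
from itertools import product


def acl_commands(tenants, services, zookeeper="ZooKeeper-Connection-String"):
    """Build one read-ACL command per (tenant, service) pair.

    Assumption: every service of every tenant has its own topic named
    "<tenant>.<service>.events" and a certificate CN "<tenant>-<service>".
    """
    commands = []
    for tenant, service in product(tenants, services):
        commands.append(
            "/bin/kafka-acls.sh "
            f"--authorizer-properties zookeeper.connect={zookeeper} "
            f'--add --allow-principal "User:CN={tenant}-{service}" '
            f"--operation Read --group=* --topic {tenant}.{service}.events"
        )
    return commands


tenants = [f"tenant{i}" for i in range(1, 301)]   # 300 tenants
services = [f"service{i}" for i in range(1, 36)]  # 35 microservices
commands = acl_commands(tenants, services)
print(len(commands))  # 10500 commands for read access alone
print(commands[0])
```

And that covers only a single operation on a single topic per service; write access and consumer groups multiply the list further.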

So we spent some sleepless weeks trying to understand all the pros and cons of different solutions like Strimzi and native Kafka ACLs, and, as always, we reinvented the wheel: a new and fresh one, but one that works in a cross-cloud manner.

Why do that?

In our current company, we are building a solution that (as we see it) should revolutionize the DevOps concept by enabling developers to become real DevOps engineers. Such a commitment requires us to support a variety of cloud platforms like GCP, AWS, or even Azure, and to ensure that all the components we provide work similarly across them. That’s why we’ve chosen a multi-cloud approach: it’s easier to support when an interface does not depend on the cloud!

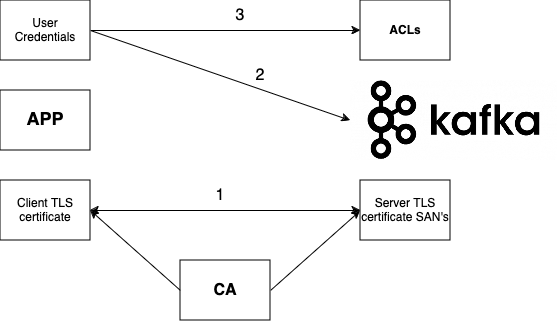

The generic core concept can be explained pretty easily (img. 1):

- We enable SASL authentication in Kafka, with TLS on top;

- We use the native Strimzi operator capability to generate and control users (in “pure” Kafka deployments), and Terraform (OpenTofu) for the AWS MSK Kafka implementation;

- We use native Kafka ACLs (with some steroids) to implement the ACL concept.
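From a client's point of view, the first point boils down to a handful of connection settings. The sketch below builds them as a plain dictionary, using the property names understood by librdkafka-based clients such as confluent-kafka. The SCRAM-SHA-512 mechanism, the port, the CA path, and the function name `sasl_tls_client_config` are assumptions for illustration, not details of our setup.

```python
def sasl_tls_client_config(bootstrap, username, password, ca_path):
    """Return client settings for SASL authentication over TLS."""
    return {
        "bootstrap.servers": bootstrap,
        "security.protocol": "SASL_SSL",    # SASL authentication, TLS transport
        "sasl.mechanism": "SCRAM-SHA-512",  # assumption: SCRAM credentials
        "sasl.username": username,
        "sasl.password": password,
        "ssl.ca.location": ca_path,         # CA that signed the broker certificates
    }


config = sasl_tls_client_config(
    bootstrap="kafka-bootstrap.kafka.svc.cluster.local:9093",  # port is hypothetical
    username="tenant1-core-user",
    password="change-me",          # placeholder, load it from a secret in practice
    ca_path="/etc/kafka/ca.crt",   # hypothetical path
)
print(config["security.protocol"])  # SASL_SSL
```

A dictionary like this can typically be handed straight to a client constructor, for example `confluent_kafka.Producer(config)`.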

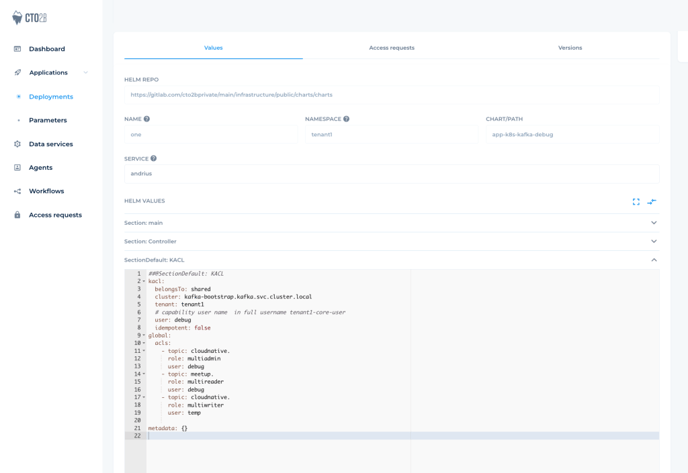

After some research, we found that the open-source Kafka GitOps toolkit fits the scheme: it works as a state machine and synchronizes the target Kafka cluster to the state we need. As the cherry on top, we added our own integration with the DevOps stack we build, via a K8S Operator that combines all the bits and pieces into a single KafkaACL (KACL) CRD and integrates fully into the service deployment flow. So the final solution exposed to our customers works like a charm:

Or in a code view (example):

##@SectionDefault: KACL

kacl:

belongsTo: shared

cluster: kafka-bootstrap.kafka.svc.cluster.local

tenant: tenant1 # capability user name in full username tenant1-core-user

user: debug

idempotent: false

global:

acls:

– topic: cloudnative.

role: multiadmin

user: debug

– topic: meetup.

role: multireader

user: debug

– topic: cloudnative.

role: multiwriter

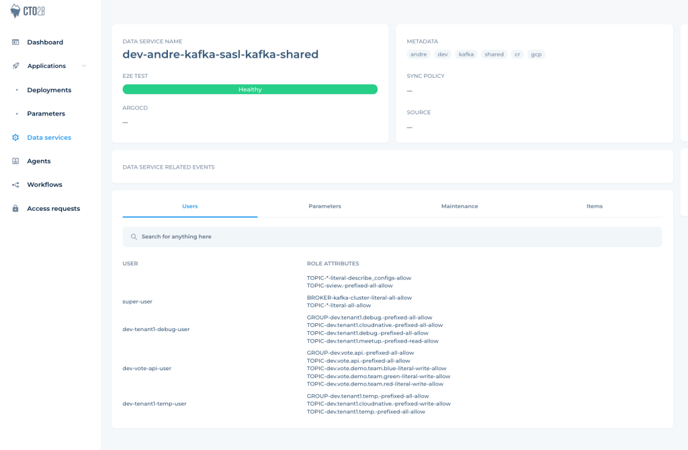

user: tempWhich would give a pretty good ACL result shown on image below:

You can add as many definitions as you want, in a separate resource for each user. Easy as that! And the format is platform-independent.
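To make the idea more tangible, here is a minimal sketch of what happens conceptually with such definitions: roles are expanded into individual ACL entries, and the result is compared with the cluster state to decide what to create and what to delete. It is not the operator's actual code. The meaning of each role, the `<tenant>-<user>-user` username pattern, and the names `Acl`, `expand`, and `reconcile` are assumptions guessed from the example above.

```python
from typing import NamedTuple


class Acl(NamedTuple):
    principal: str
    topic_prefix: str
    operation: str


# Assumption: what each role allows is guessed from its name.
ROLE_OPERATIONS = {
    "multireader": {"Read", "Describe"},
    "multiwriter": {"Write", "Describe"},
    "multiadmin": {"Read", "Write", "Describe", "Create", "Delete"},
}


def expand(tenant, default_user, acls):
    """Turn role-based definitions into individual ACL entries."""
    desired = set()
    for entry in acls:
        user = entry.get("user", default_user)
        principal = f"User:{tenant}-{user}-user"  # assumed username pattern
        for operation in ROLE_OPERATIONS[entry["role"]]:
            desired.add(Acl(principal, entry["topic"], operation))
    return desired


def reconcile(desired, current):
    """Return (to_create, to_delete) so the cluster ends up matching desired."""
    return desired - current, current - desired


definitions = [
    {"topic": "cloudnative.", "role": "multiadmin", "user": "debug"},
    {"topic": "meetup.", "role": "multireader", "user": "debug"},
    {"topic": "cloudnative.", "role": "multiwriter", "user": "temp"},
]
desired = expand("tenant1", "debug", definitions)
current = {Acl("User:tenant1-old-user", "meetup.", "Read")}  # pretend cluster state
to_create, to_delete = reconcile(desired, current)
print(len(to_create), len(to_delete))  # 9 1
```

Treating ACLs as plain sets keeps the synchronization step trivial: whatever is desired but missing gets created, and whatever exists but is no longer desired gets removed.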

Conclusion

Kafka is a great tool, but sometimes you need to add a bit of security to make it much more trustworthy. There are numerous ways to make that happen in DevOps automation. However, some of them need to be simplified, made easier to understand, or even integrated into the GitOps flow. That’s why we, as a multi-cloud DevOps company, have chosen the path of combining all the best concepts into one: the final KafkaACL operator, which suits both native Kafka and AWS MSK.